Autoscaling

- Introduction

- Cluster Autoscaler Kubernetes Tool

- HPA and VPA

- Cluster Autoscaler and Helm

- KEDA Kubernetes Event Driven Autoscaling

- Cluster Autoscaler and DockerHub

- Cluster Autoscaler in GKE, EKS, AKS and DOKS

- Cluster Autoscaler in OpenShift

- Scaling Kubernetes to multiple clusters and regions

- Kubernetes Load Testing and High Load Tuning

- Tweets

- Videos

Introduction

- levelup.gitconnected.com: Effects of Docker Image Size on AutoScaling w.r.t Single and Multi-Node Kube Cluster

- infracloud.io: 3 Autoscaling Projects to Optimise Kubernetes Costs Three autoscaling use cases:

- Autoscaling Event-driven workloads

- Autoscaling real-time workloads

- Autoscaling Nodes/Infrastructure

- blog.scaleway.com: Understanding Kubernetes Autoscaling

- infracloud.io: Kubernetes Autoscaling with Custom Metrics (updated) 🌟

- sysdig.com: Kubernetes pod autoscaler using custom metrics

- learnk8s.io: Architecting Kubernetes clusters — choosing the best autoscaling strategy 🌟 How to configure multiple autoscalers in Kubernetes to minimise scaling time. Four factors affect scaling:

- HPA reaction time.

- CA reaction time.

- Node provisioning time.

- Pod creation time.

- thenewstack.io: Reduce Kubernetes Costs Using Autoscaling Mechanisms

- cast.ai: Guide to Kubernetes autoscaling for cloud cost optimization 🌟

- thenewstack.io: Scaling Microservices on Kubernetes 🌟

- Horizontally scaling a monolith is much more difficult; and we simply can’t independently scale any of the “parts” of a monolith. This isn’t ideal, because it might only be a small part of the monolith that causes the performance problem. Yet, we would have to vertically scale the entire monolith to fix it. Vertically scaling a large monolith can be an expensive proposition.

- Instead, with microservices, we have numerous options for scaling. For instance, we can independently fine-tune the performance of small parts of our system to eliminate bottlenecks and achieve the right mix of performance outcomes.

- cloud.ibm.com: Tutorial - Scalable webapp 🌟

- containiq.com: Kubernetes Autoscaling: A Beginners Guide. Getting Started + Examples This article gives an overview of the autoscaling features provided by Kubernetes, explains how autoscaling works, and shows how to configure it.

- medmouine/Kubernetes-autoscaling-poster: Kubernetes autoscaling poster [PDF] 🌟

- medium.com/airbnb-engineering: Dynamic Kubernetes Cluster Scaling at Airbnb In this post, you’ll learn how Airbnb dynamically sizes its clusters using the Kubernetes Cluster Autoscaler, and which functionality it has contributed to the sig-autoscaling community.

- chaitu-kopparthi.medium.com: Scaling Kubernetes workloads using custom Prometheus metrics

- medium.com/@niklas.uhrberg: Auto scaling in Kubernetes using Kafka and application metrics — part 1 In this article, you will find a case study on auto scaling long-running jobs in Kubernetes using external metrics from Kafka and the application itself.

- openai.com: Scaling Kubernetes to 7,500 Nodes

- thinksys.com: Understanding Kubernetes Autoscaling Types of Kubernetes Autoscaling:

- Horizontal Pod Autoscaler (HPA)

- Vertical Pod Autoscaler (VPA)

- Cluster Autoscaler

- medium.com/mindboard: What is Autoscaling in Kubernetes? Autoscaling is a useful feature in Kubernetes that automatically adjusts the number and resource consumption of pods in your deployment to meet the changing needs of your app.

- clickittech.com: Kubernetes Autoscaling: How to use the Kubernetes Autoscaler In this tutorial, you’ll install and test three different autoscalers on EKS:

- Horizontal Pod Autoscaler

- Vertical Pod Autoscaler

- Cluster Autoscaler
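
The four factors listed earlier in this section (HPA reaction time, CA reaction time, node provisioning time, pod creation time) add up when a scale-out needs a new node. The sketch below is only an illustration of that arithmetic: the class name and all delay values are hypothetical assumptions, not measurements from the linked article.

```python
from dataclasses import dataclass


@dataclass
class ScalingDelays:
    """Delays in seconds. All values used below are illustrative assumptions."""
    hpa_reaction: float
    ca_reaction: float
    node_provisioning: float
    pod_creation: float

    def total_with_new_node(self) -> float:
        # No spare capacity: every stage sits on the critical path.
        return (self.hpa_reaction + self.ca_reaction
                + self.node_provisioning + self.pod_creation)

    def total_with_spare_capacity(self) -> float:
        # A node with free resources already exists, so the Cluster
        # Autoscaler and node provisioning stages are skipped.
        return self.hpa_reaction + self.pod_creation


# Hypothetical numbers, chosen only to show the difference.
delays = ScalingDelays(hpa_reaction=90, ca_reaction=30,
                       node_provisioning=240, pod_creation=30)
print("new node needed:", delays.total_with_new_node(), "s")
print("spare capacity: ", delays.total_with_spare_capacity(), "s")
```

Under these assumed values, node provisioning dominates the total, which is why keeping some spare capacity shortens the time to scale.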

Cluster Autoscaler Kubernetes Tool

- kubernetes.io: Cluster Management - Resizing a cluster

- github.com/kubernetes: Kubernetes Cluster Autoscaler

- Kubernetes Autoscaling in Production: Best Practices for Cluster Autoscaler, HPA and VPA In this article we will take a deep dive into Kubernetes autoscaling tools including the cluster autoscaler, the horizontal pod autoscaler and the vertical pod autoscaler. We will also identify best practices that developers, DevOps and Kubernetes administrators should follow when configuring these tools.

- gitconnected.com: Kubernetes Autoscaling 101: Cluster Autoscaler, Horizontal Pod Autoscaler, and Vertical Pod Autoscaler

- packet.com: Kubernetes Cluster Autoscaler

- itnext.io: Kubernetes Cluster Autoscaler: More than scaling out

- cloud.ibm.com: Containers Troubleshoot Cluster Autoscaler

- platform9.com: Kubernetes Autoscaling Options: Horizontal Pod Autoscaler, Vertical Pod Autoscaler and Cluster Autoscaler

- banzaicloud.com: Autoscaling Kubernetes clusters

- tech.deliveryhero.com: Dynamically overscaling a Kubernetes cluster with cluster-autoscaler and Pod Priority

- medium: Build Kubernetes Autoscaling for Cluster Nodes and Application Pods 🌟

- Auto-Scaling Your Kubernetes Workloads (K8s) 🌟

- medium: Cluster Autoscaler in Kubernetes

- itnext.io: Kubernetes Resources and Autoscaling — From Basics to Greatness 🌟

- kubedex.com: autoscaling 🌟

- chrisedrego.medium.com: Kubernetes AutoScaling Series: Cluster AutoScaler 🌟

- hashnode.com: Proactive cluster autoscaling in Kubernetes | Daniele Polencic 🌟🌟 Scaling nodes in a Kubernetes cluster could take several minutes with the default settings. Learn how to size your cluster nodes and proactively create nodes for quicker scaling.

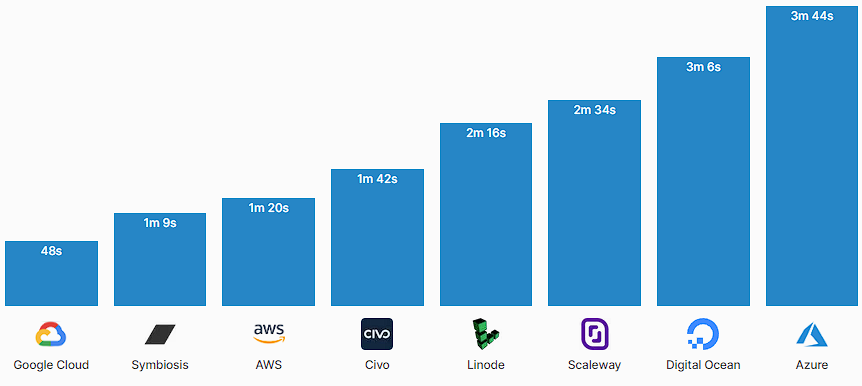

- symbiosis.host: Benchmarking Kubernetes node initialization In this benchmark, you will compare cluster initialization time across 8 managed Kubernetes providers

- Kubernetes nodes are slow to initialize. OS’s have to be booted, networks have to be configured, kubelets need to initialize, certificates need to be issued and approved, and so on…

- The unfortunate side effect is that cluster autoscaling is limited by the time it takes to add more nodes into the pool. If your environment sees a sudden spike in usage there might not be enough time to scale up to handle the additional load.

- This volatility in usage will impact the amount of additional capacity that is necessary for your cluster to function during high stress. For very bursty settings you will need to configure more headroom to account for the heightened variance.

- However, the faster nodes initialize, the faster your cluster can react to these sudden spikes. So quick nodes not only reduce the risk of resource congestion, they also reduce the additional headroom you need to have on hand, leading to lower costs.

- In this benchmark we compared initialization time across 8 managed Kubernetes providers.

- the-gigi.github.io: Advanced Kubernetes Scheduling and Autoscaling
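
The relationship between node initialization time and headroom described in the benchmark entry above can be made concrete with a rough estimate. This is a simplified sketch, not the benchmark's method: it assumes demand grows at a constant rate during a spike, and the function name and numbers are hypothetical.

```python
import math


def headroom_pods(spike_pods_per_minute: float, node_init_seconds: float) -> int:
    """Spare pod capacity needed to absorb a spike while new nodes boot.

    Assumes (hypothetically) that demand grows at a constant rate and that
    no new capacity is available until node initialization finishes.
    """
    return math.ceil(spike_pods_per_minute * node_init_seconds / 60)


# Same spike, two assumed node initialization times.
slow = headroom_pods(spike_pods_per_minute=10, node_init_seconds=300)
fast = headroom_pods(spike_pods_per_minute=10, node_init_seconds=60)
print("headroom with 300 s init:", slow, "pods")
print("headroom with 60 s init: ", fast, "pods")
```

Under this assumption, headroom scales linearly with initialization time, so a provider that boots nodes five times faster needs a fifth of the idle capacity.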

HPA and VPA

- HPA: Horizontal Pod Autoscaler

- VPA: Vertical Pod Autoscaler

- returngis.net: Escalado vertical de tus pods en Kubernetes con VerticalPodAutoscaler (Vertical scaling of your pods in Kubernetes with VerticalPodAutoscaler)

- medium: Build Kubernetes Autoscaling for Cluster Nodes and Application Pods Via the Cluster Autoscaler, Horizontal Pod Autoscaler, and Vertical Pod Autoscaler

- itnext.io: Horizontal Pod Autoscaling with Custom Metric from Different Namespace

- Kubernetes autoscaling with Istio metrics 🌟 Scaling based on traffic is not something new to Kubernetes; ingress controllers such as NGINX can expose Prometheus metrics for HPA. The difference in using Istio is that you can autoscale backend services as well, including apps that are accessible only from inside the mesh.

- medium: 1/3 Autoscaling in Kubernetes: A Primer on Autoscaling

- around25.com: Horizontal Pod Autoscaler in Kubernetes 🌟

- superawesome.com: Scaling pods with HPA using custom metrics. How we scale our kid-safe technology using Kubernetes 🌟

- velotio.com: Autoscaling in Kubernetes using HPA and VPA

- kubectl-vpa Tool to manage VPA (vertical-pod-autoscaler) resources in a Kubernetes cluster

- itnext.io: K8s Vertical Pod Autoscaling 🌟

- czakozoltan08.medium.com: Stupid Simple Scalability

- sysdig.com: Trigger a Kubernetes HPA with Prometheus metrics Using KEDA to query Prometheus in order to automatically create a Kubernetes HPA

- cloudnatively.com: Understanding Horizontal Pod Autoscaling

- blog.px.dev: Horizontal Pod Autoscaling with Custom Metrics in Kubernetes 🌟 In this post, you’ll learn how to autoscale your Kubernetes deployment using custom application metrics (i.e. HTTP requests/second)

- awstip.com: Kubernetes HPA HPA, short for Horizontal Pod Autoscaling, is a piece of software that dynamically scales pods based on thresholds such as CPU, memory, or HTTP requests (custom metrics).

- medium.com/@CloudifyOps: Setting up a Horizontal Pod Autoscaler for Kubernetes cluster

- betterprogramming.pub: Advanced Features of Kubernetes’ Horizontal Pod Autoscaler Kubernetes’ Horizontal Pod Autoscaler has features you probably don’t know about. Here’s how to use them to your advantage.

- code.egym.de: Horizontal Pod Autoscaler in Kubernetes (Part 1) — Simple Autoscaling using Metrics Server Learn how to use Metrics Server to horizontally scale native and JVM services in Kubernetes automatically based on resource metrics.

- medium.com/@kewynakshlley: Performance evaluation of the autoscaling strategies vertical and horizontal using Kubernetes Scalable applications may adopt horizontal or vertical autoscaling to dynamically provision resources in the cloud. To help choose the best strategy, this work compares the performance of horizontal and vertical autoscaling in Kubernetes. In measurement experiments applying synthetic load to a web application, horizontal autoscaling proved more efficient, reacting faster to load variation and having a lower impact on application response time.

- itnext.io: Stupid Simple Scalability

- faun.pub: Scaling Your Application Using Kubernetes - Harness | Pavan Belagatti

- dnastacio.medium.com: Infinite scaling with containers and Kubernetes The article starts with a recap of Kubernetes resource management and its core concepts of requests and limits. Then it discusses those static limits in the realm of pod autoscalers, such as HPA, VPA, and KPA.

- medium.com/@badawekoo: Scaling in Kubernetes: What, Why and How?

- pauldally.medium.com: HorizontalPodAutoscaler uses request (not limit) to determine when to scale by percent In this article, you will learn how the Horizontal Pod Autoscaler uses requests (and not limits) when computing the target utilization percentage to scale pods

- dev.to: Scaling Your Application With Kubernetes | Pavan Belagatti

- github.com/jthomperoo: Predictive Horizontal Pod Autoscaler Horizontal Pod Autoscaler built with predictive abilities using statistical models. Predictive Horizontal Pod Autoscalers (PHPAs) are Horizontal Pod Autoscalers (HPAs) with extra predictive capabilities baked in, allowing you to apply statistical models to the results of HPA calculations to make proactive scaling decisions.

- thenewstack.io: K8s Resource Management: An Autoscaling Cheat Sheet 🌟 A concise but comprehensive guide to using and managing horizontal and vertical autoscaling in the Kubernetes environment.

- waswani.medium.com: Autoscaling Pods in Kubernetes If you are hosting your workload in a cloud environment and your traffic pattern fluctuates (think unpredictable), you need a mechanism to automatically scale out (and, of course, scale in) your workload so the service meets its defined Service Level Objective (SLO) without impacting the user experience. This is referred to as autoscaling or, to be precise, horizontal scaling.

- mckornfield.medium.com: Working with HPAs in Kubernetes How to make your Kubernetes workloads scale with a few simple steps

- code.egym.de: Vertical Pod Autoscaler in Kubernetes Learn how to use Vertical Pod Autoscaler (VPA) to vertically scale services in Kubernetes automatically based on resource metrics.

- faun.pub: Intelligently estimating your Kubernetes resource needs! In this tutorial, you will learn how to use the Vertical Pod Autoscaler and Goldilocks to guess the correct requests and limits for your Pods

- itnext.io: Kubernetes: vertical Pods scaling with Vertical Pod Autoscaler

- medium.com/@adityadhopade18: Mastering K8s Event Driven AutoScaling
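
Several entries in this section, in particular the pauldally.medium.com article, note that the HPA computes utilization against container requests rather than limits. The sketch below illustrates why that matters, using the replica formula from the Kubernetes HPA documentation, `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The function names and pod numbers are hypothetical, and the sketch ignores the controller's tolerance and stabilization behavior.

```python
import math


def utilization_percent(usage_millicores: float, base_millicores: float) -> float:
    """Usage expressed as a percentage of a base value (request or limit)."""
    return 100 * usage_millicores / base_millicores


def desired_replicas(current_replicas: int,
                     current_utilization: float,
                     target_utilization: float) -> int:
    """Replica formula from the Kubernetes HPA documentation (no tolerance applied)."""
    return math.ceil(current_replicas * current_utilization / target_utilization)


# Hypothetical deployment: 4 pods, each using 300m CPU,
# with a 200m request and a 500m limit, and a 75% utilization target.
usage, request, limit, target, replicas = 300, 200, 500, 75, 4

vs_request = utilization_percent(usage, request)  # what the HPA uses
vs_limit = utilization_percent(usage, limit)      # what one might wrongly expect

print("replicas when measured against request:",
      desired_replicas(replicas, vs_request, target))
print("replicas if measured against limit:    ",
      desired_replicas(replicas, vs_limit, target))
```

With these assumed numbers, utilization against the request is 150%, so the formula asks for 8 replicas. Measured against the limit it would be 60%, and the replica count would stay at 4.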

Kubernetes Scale to Zero

- dzone: Scale to Zero With Kubernetes with KEDA and/or Knative This article reviews how Kubernetes provides the platform capabilities for dynamic deployment, scaling, and management in Cloud-native applications.

- dev.to/danielepolencic: Request-based autoscaling in Kubernetes: scaling to zero

- linode.com: Scaling Kubernetes to Zero (And Back) In this article, you will learn how to monitor the HTTP requests to your apps in Kubernetes and define autoscaling rules to increase and decrease replicas for your workloads.

Cluster Autoscaler and Helm

- hub.helm.sh: cluster-autoscaler The cluster autoscaler scales worker nodes within an AWS autoscaling group (ASG) or Spotinst Elastigroup.

KEDA Kubernetes Event Driven Autoscaling

- keda.sh: Kubernetes Event-driven Autoscaling. Application autoscaling made simple. KEDA is a Kubernetes-based Event Driven Autoscaler. With KEDA, you can drive the scaling of any container in Kubernetes based on the number of events needing to be processed. https://github.com/kedacore/keda

- medium.com/backstagewitharchitects: How Autoscaling Works in Kubernetes? Why You Need To Start Using KEDA?

- partlycloudy.blog: Horizontal Autoscaling in Kubernetes #3 – KEDA

- thenewstack.io: CNCF KEDA 2.0 Scales up Event-Driven Programming on Kubernetes

- blog.cloudacode.com: How to Autoscale Kubernetes pods based on ingress request — Prometheus, KEDA, and K6 In this article, you will learn how autoscale pods with KEDA, Prometheus and the metrics from the ingress-nginx. You will use k6 to generate the load and observe the pod count increase as more requests are handled by the ingress controller.

- medium.com/@toonvandeuren: Kubernetes Scaling: The Event Driven Approach - KEDA This article discusses two different approaches to automatically scaling your apps within a Kubernetes cluster: the Horizontal Pod Autoscaler and the Kubernetes Event-Driven Autoscaler (KEDA)

- youtube: Application Autoscaling Made Easy With Kubernetes Event-Driven Autoscaling (KEDA)

- Dzone: Autoscaling Your Kubernetes Microservice with KEDA Introduction to KEDA—event-driven autoscaler for Kubernetes, Apache Camel, and ActiveMQ Artemis—and how to use it to scale a Java microservice on Kubernetes.

- tomd.xyz: Event-driven integration on Kubernetes with Camel & KEDA 🌟 Can we develop apps in Kubernetes that autoscale based on events? Perhaps, with this example using KEDA, ActiveMQ and Apache Camel.

- faun.pub: Scaling an app in Kubernetes with KEDA (no Prometheus is needed)

- itnext.io: Event Driven Autoscaling KEDA expands the capabilities of Kubernetes by managing the integration with external sources allowing you to auto-scale your Kubernetes Deployments based on data from both internal and external metrics.

- medium.com/@casperrubaek: Why KEDA is a game-changer for scaling in Kubernetes KEDA makes it possible to easily scale based on any metric imaginable from almost any metric provider and is running at a massive scale in production in the cloud at some of the largest corporations in the world.

- levelup.gitconnected.com: Scale your Apps using KEDA in Kubernetes

- blog.devops.dev: KEDA: Autoscaling Kubernetes apps using Prometheus

- purushothamkdr453.medium.com: Event driven autoscaling in kubernetes using KEDA

- medium.com/@rtaplamaci: Horizontal Scaling on Kubernetes Clusters Based on AWS CloudWatch Metrics with KEDA In this article, you will learn how to use KEDA to horizontally scale the workloads running in a Kubernetes cluster based on the custom metrics exposed via AWS CloudWatch

- medium.com/@hirushanonline: Dynamic Scaling with Kubernetes Event-driven Autoscaling (KEDA)

- kedify.io: Prometheus and Kubernetes Horizontal Pod Autoscaler don’t talk, KEDA does

- github.com/kedacore/keda/issues/2214 Scaler for Amazon managed service for Prometheus

- opcito.com: A guide to mastering autoscaling in Kubernetes with KEDA

- dev.to/vinod827: Scale your apps using KEDA in Kubernetes
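
The idea behind the KEDA entries above, scaling on the number of events waiting to be processed, can be illustrated with a small calculation. This is a minimal sketch of the concept and not KEDA's implementation: the function and parameter names are hypothetical, and the replica bounds only loosely mirror a ScaledObject's minimum and maximum replica counts.

```python
import math


def event_driven_replicas(pending_events: int,
                          events_per_replica: int,
                          min_replicas: int = 0,
                          max_replicas: int = 10) -> int:
    """Replicas needed so each one handles about `events_per_replica` events.

    Hypothetical helper: with no pending events the workload drops to
    `min_replicas` (which may be zero); otherwise the result is clamped
    to the [min_replicas, max_replicas] range.
    """
    if pending_events <= 0:
        return min_replicas
    wanted = math.ceil(pending_events / events_per_replica)
    return max(min_replicas, min(wanted, max_replicas))


# Assumed queue depths for a worker that should handle 5 messages each.
for queue_depth in (0, 12, 1000):
    print(queue_depth, "pending ->",
          event_driven_replicas(queue_depth, events_per_replica=5), "replicas")
```

An empty queue maps to zero replicas, which is the scale-to-zero behavior that CPU-based HPA rules do not provide on their own.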

Cluster Autoscaler and DockerHub

Cluster Autoscaler in GKE, EKS, AKS and DOKS

- Amazon Web Services: EKS Cluster Autoscaler

- Azure: AKS Cluster Autoscaler

- Google Cloud Platform: GKE Cluster Autoscaler

- DigitalOcean Kubernetes: DOKS Cluster Autoscaler

Cluster Autoscaler in OpenShift

- OpenShift 3.11: Configuring the cluster auto-scaler in AWS

- OpenShift 4.4: Applying autoscaling to an OpenShift Container Platform cluster

Scaling Kubernetes to multiple clusters and regions

Kubernetes Load Testing and High Load Tuning

- itnext.io: Kubernetes: load-testing and high-load tuning — problems and solutions

- engineering.zalando.com: Building an End to End load test automation system on top of Kubernetes Learn how we built an end-to-end load test automation system to make load tests a routine task.

- thenewstack.io: Sidecars are Changing the Kubernetes Load-Testing Landscape Sidecars don’t just capture traffic. They can replay it as well. They can also transform any metadata, like timestamps, before it sends it to your application.

- medium.com/teamsnap-engineering: Load Testing a Service with ~20,000 Requests per Second with Locust, Helm, and Kustomize

- containiq.com: Kubernetes Load Testing | 8 Tools & Best Practices If you want to understand your Kubernetes application, performance testing is crucial. In this post, you’ll look at the value of performance testing, how to get started, and testing tools.

Tweets

☁️ Knowledge - Vertical vs Horizontal scaling 📈

— Simon ☁️ (@simonholdorf) October 2, 2021

Vertical scaling: Increase the power of machines. E.g. upgrade from 4 vCPU to 8 vCPU --> Scaling Up ✅

Horizontal scaling: Add more machines. E.g. 3 web servers instead of 1 --> Scaling Out ☑️